Machinic automation in the process of text and music composition:The versificator

- Updated April 2024

The idea for the piece “Versificator – Render 3” comes originally as a metaphor for the versificator created by George Orwell in the novel “1984” whose main purpose was to act as an automatic generator of literature and music. I am an avid consumer of science fiction literature and films, and while thinking of automated music generation as an instrument of alienation in dystopian literature from the middle 20th century – in particular in Orwell and Huxley, I came up with a thought: what if there was something artistically valuable that could come out of these machines? what if creative machinic automation, instead of being used as an instrument of alienation, could be used as a compositional tool that could contribute to creating interesting musical works? The result of the implementation of a partially-automated compositional workflow and its creative exploration is the piece “Versificator – Render 3”, for vocal ensemble. In the novel “1984”, the versificator plays a role as a tool of social control. In this work, the metaphor for the versificator is reframed as a tool employed to artistically investigate music and text composition through the interplay between an automated but still dynamic cycle of generative exploration, in dialog with the creative subjectivity of a composer.

Keywords: Versificator, Orwell, Computer-aided composition, Constraint Algorithms

Background

The inspiration for this composition lies in the still-relevant-nowadays literary works of early to mid-20th century writers Aldous Huxley and George Orwell. As I see it, their explorations of technological dystopias are far from relics of the past: they continue to resonate strongly today, as evidenced by a mounting pile of subsequent literature and filmography that echoes their themes of totalitarianism, technology, alienation, and rebellion. In this sense, I feel that the writings of Orwell and Huxley should persistently resonate within the collective consciousness, and ultimately, the reflections on the idea for the piece and its workflow should also welcome discussion around these overarching socio-cultural issues.

Automated music

George Orwell’s “1984” (Orwell 2023) and Aldous Huxley’s “Brave New World” (Huxley 2013) provide distinct yet related dystopian visions of a futuristic – for the time the books were written – Western world. Orwell’s narrative is set in a future where a totalitarian regime maintains power through strict control and mass surveillance, while Huxley’s world presents a technologically advanced society with caste divisions based on genetic manipulation and psychological conditioning. Both authors have erased historical and cultural legacies in their respective societies, however, they give artificially generated music an important role in their narratives. Orwell’s “1984” features the versificator, an obscure device used by the Ministry of Truth to produce cultural content, media, and entertainment, including automated song and lyric generation, without human intervention. Similarly, in “Brave New World”, music, generated by the synthetic music machines, serves as entertainment and promotes societal conformism, preventing people from challenging the prevailing order. Both authors attribute these mechanisms certain power as tools for social control. The music from both Orwell’s and Huxley’s generative devices, the versificator and the synthetic music machines, is portrayed as low-quality, with simplistic melodies and cliché lyrics. Unfortunately, we don’t have access to the sound imagination of Huxley and Orwell to have a better idea of the actual audio realization of these songs[1], but it is clear that both authors equate mechanically produced music with a precarious outcome that mainly serves the purpose of social alienation.

- [1] It is possible to hear a proletarian singing one of the versificator’s sons in the movie “1984” (1984) by M. Radford

Artistic inquiries

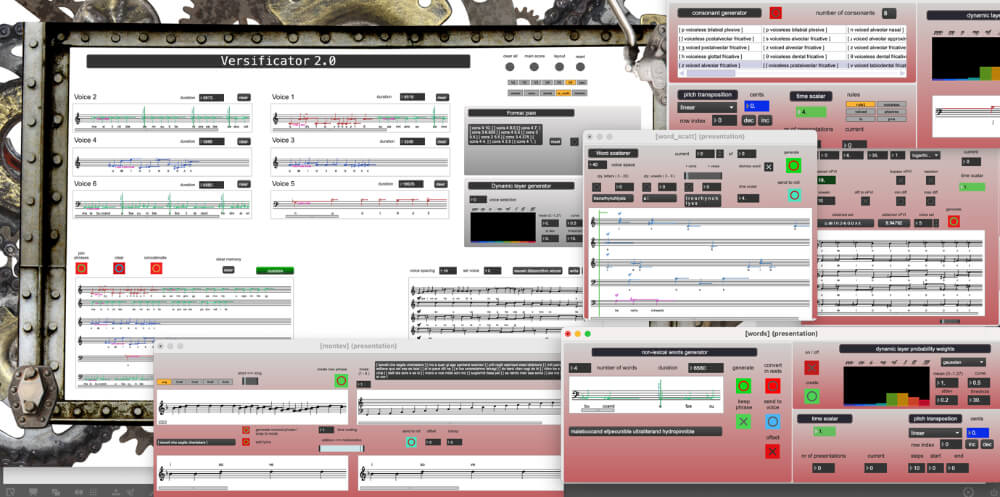

Overall, my versificator is a modular system that simultaneously generates and sonifies machine-generated text using AI rule-based methods. This process automatizes a large part of the generation of musical information in the form of pitches, durations, dynamics, and vocal articulations. In addition, the system provides the possibility of automatizing the formal structure of the piece and the temporal and textural disposition of the musical material, by carrying out highly complex stochastic calculations. The system thus, facilitates diverse processes of musical generation, transformation, and concatenation by solely changing input parameters. However, this dependence on automated processes raises multiple inquiries, especially about the musical aspects that escape the computational formalization of the system. Can we dismiss these elements during the music creation process? On the other hand, are the parameters that the system can operate compositionally enough to produce music with depth and significance? Is there still space for subjectivity within the system’s computational framework? And what about the potential for defiance of the system? If it exists, in what ways might it be investigated and utilized?

Implementation

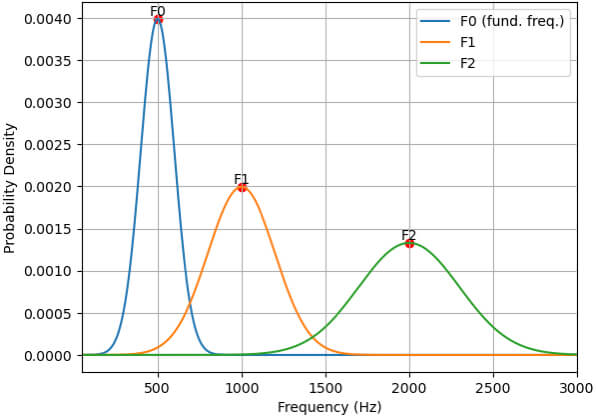

Initially, I thought of a system that could generate some text and from this text, automatically retrieve some music. I decided to try to create some non-sensical poetry, and from it, generate a musical layer (pitch and durations) based on its phonological content. This in a way resembles the functioning of a Text-To-Speech (TTS) system. A TTS system generally receives some text input and translates it into the sound of a synthetic voice. This translation relies on the artificial recreation of acoustic information from the text by mapping letters to sounds with a particular spectral characteristic which makes us recognize them as speech sounds[2]. For example, to synthesize a vowel, the TTS system should contain information on its spectral structure in the form of the fundamental frequency, formants, and durations and use this information to synthesize it. In the case of my system, instead of synthetic speech, the result is a musical unit that maps the spectral structure of a phoneme into musical symbolic representation.

- [2] Probably the most widely used TTS synthesis method from the 80s onwards was a type of synthesizer developed especially after the works of Dennis Klatt: the Klatt synthesized (Klatt 1980).

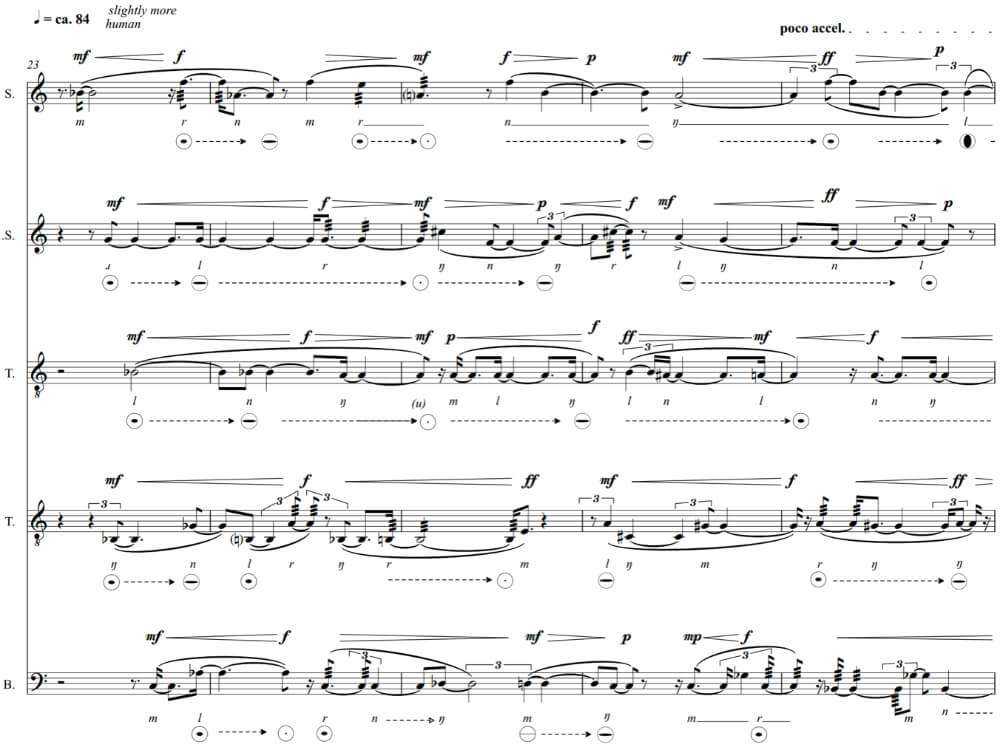

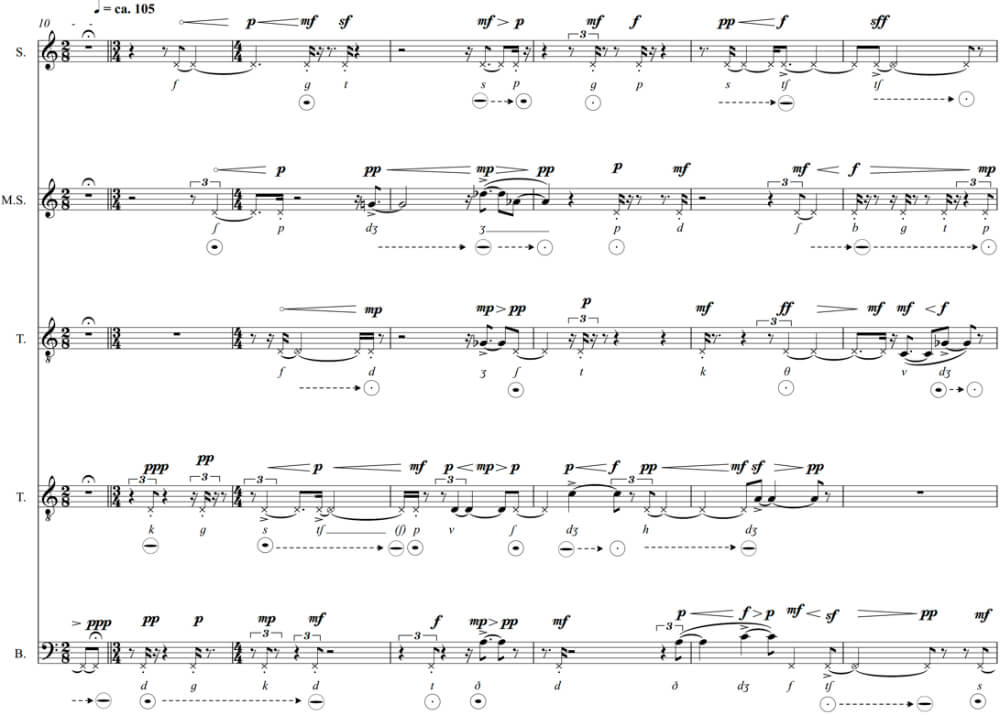

The integral musical material is the phonological content of an imaginary language that nurtures itself with a merging of phonemes coming from Latin and English (see performance notes, pages. 1 and 2 of the score). This phonological world materializes in three forms, as nonsensical words, and only vocalic or consonant sounds. Each of these forms comes out from three different generative modules. The system relies mainly on these three text generator modules plus a formal operator module. Within each module exists a complex interaction between multiple constraint rules of different natures. The final complexity is derived from the chaining of simple rules. The outcome of each module is a musical unit – let’s call it a musical phrase – consisting of both the generated text as the uttered text for a vocal part, and its sonification as musical symbolic information in the form of pitches, durations, and vocal articulations.

The versificator consists of a large Max[3] patch containing several subpatches. The main patch is where the main global view and functionality are located, and each subpatcher is a generative module. The core functionalities are built mainly upon two Max external libraries: Bach[4] and MOZ’Lib[5]. Among many useful tools for computer-assisted composition, the Bach library provides a well-developed music notation interface. The library MOZ’Lib contains an implementation of PatchWorksConstraints, a LISP-based constraints-solving engine developed by Mikael Larsson (Laurson 1996) for the software PWGL and ported to Max by Örjan Sandred and Julien Vincenot.

- [3] Max 8 is a musical programming language in the form of a patching environment. It is available at https://cycling74.com.

- [4]https://bachproject.net.

- [5]https://github.com/JulienVincenot/MOZLib.

Compositional method

In the versificator, the process of composition occurs almost simultaneously with the process of generation, at least for the part of the process that occurs inside the system. This means that the generation of musical material carries itself a compositional logic. Mainly, the parameters that govern the generation and organization of the musical material both at the micro and the macro level have been formalized as constraint rules enforced by a constraint algorithm.

The way that constraint algorithms work is rather simple. One determines a domain, or a search space, consisting of a set of musical elements, one determines some rules for a particular musical organization for these elements, and the algorithm sorts the musical elements by enforcing this rule or set of rules. The algorithm evaluates iteratively every possible combination of elements until it finds a solution or multiple solutions, or until it finds none. The rules are usually expressed as logical statements and each candidate solution will be evaluated as true or false. Those evaluated as true are accepted and returned to the user, and those evaluated as false are rejected. In the field of contemporary music, particularly in computer-assisted composition workflows, constrained programming and its implementation for music composition as constraint algorithms have a very long history, starting with its implementations in the Illiac Suite[6] and later in the work of other relevant contemporary composers, such as Magnus Lindberg and Örjan Sandred[7].

- [6] The Illiac Suite (1957), a piece for string quartet by Lejaren Hiller is conventionally agreed to be the first musical work composed using computational algorithms in the ILLIAC computer, in the University of Illinois.

- [7] Örjan Sandred has written a significant amount of literature on the development and implementation of constraint algorithms as tools in computer-assisted composition workflows (Sandred 2009), (Sandred 2010), (Sandred 2017).

Within the versificator, I have implemented mainly two types of rules. The first type operates at the level of each individual generative module, constraining the generation of some text and its sonification in the form of musical symbolic information. The second type operates at the global formal level, involving the temporal distribution and textural organization of the output of each module.

First module: Non-sensical canon[8]

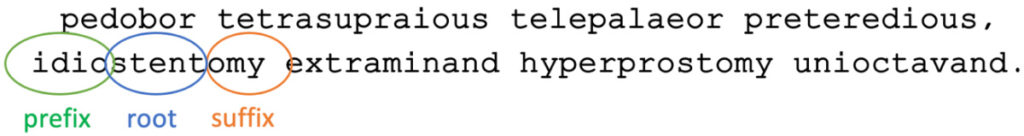

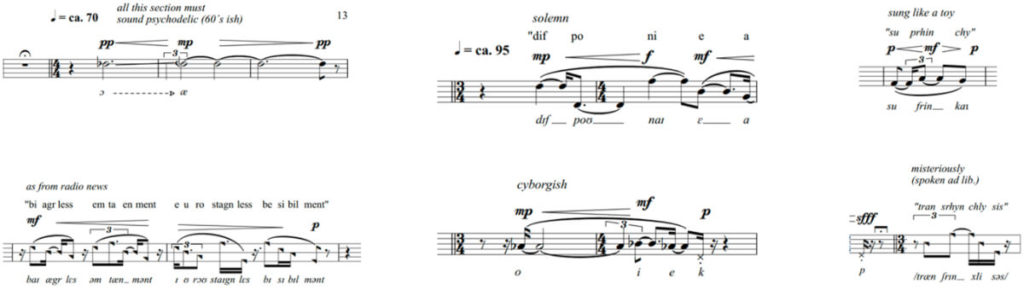

The first module generates individual or sequences of sung nonsense words. A nonsense word or a pseudoword is a unit of speech or text that appears to be a real word in a given language, as its construction follows the phonotactic rules of the language in question, although it has no meaning, or it doesn’t exist in the lexicon. The module generates pseudowords are the result of rule-based combinations of prefixes, roots, and suffixes of English words. Some rules that govern the generation of pseudowords have to do with the use of rhyme patterns[9], alliteration, number of vowels or consonants, or proportion between them. For example, depending on how many words one generates – up to a maximum of 4 – the rhyme pattern change (e.g. 2 words: aa; 3 words: aba; 4 words: abab.).

- [8] The non-sensical canon text generator is inspired by a program named “Words without sense” created by the artist Mario Guzmán (mario-guzman.com), which outputs random combinations of prefixes, suffixes, and roots in Spanish or English. My version builds upon Guzman’s work by adding the possibility of generating text using constraint rules.

- [9] A rhyme pattern applies to the ending of consecutive words. This is different from the notion of rhyme scheme, which applies to the final word of a stanza containing a determined number of syllables.

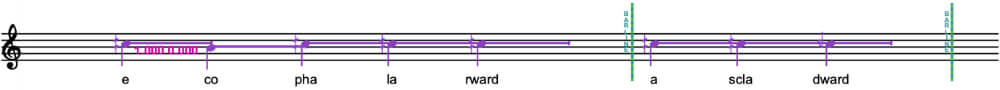

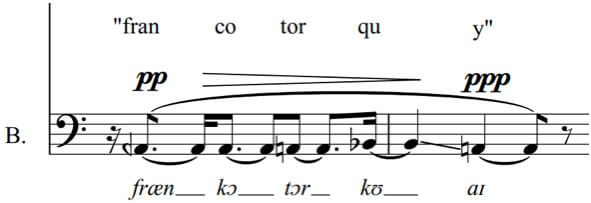

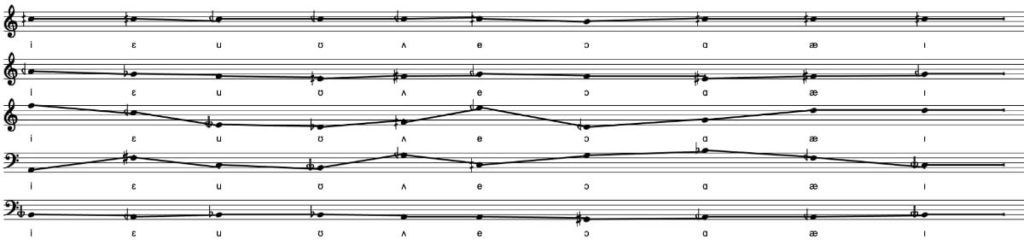

The symbolic sonification of the word occurs in stages. First, the word is automatically hyphenated, and a musical pitch is given to each vowel in a syllable – similar to almost any sung text. These notes come from a database that contains information about the formant frequencies and duration of English language vocalic sound measurements. (Hillenbrand et al. 1995). A different formant pitch will be assigned to each voice in the ensemble. The fundamental frequency will always appear in the lower voice, and the higher formants will appear successively in higher voices. The number of voices that should sing a word will determine how many notes from the formant structure should be sonified. The words thus are sung syllabically.

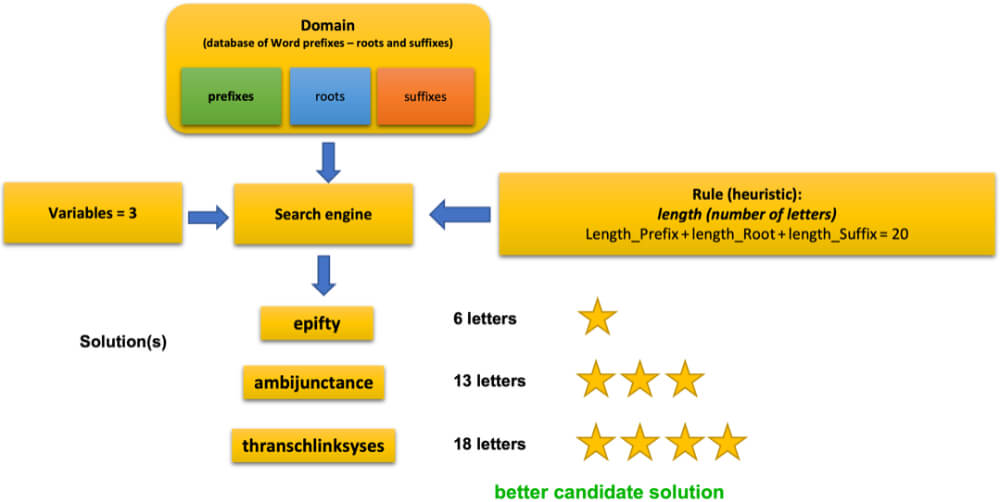

Below is illustrated how a heuristic rule[10] that controls the number of letters for a word works. The search engine receives three variables: a prefix, a root, and a suffix for a word. The rule tells the engine to count letters for a different combination of variables and find the combination that is closer to 20.

- [10] The concept of heuristic and deterministic rules are explained in “methods” – constraint algorithms.

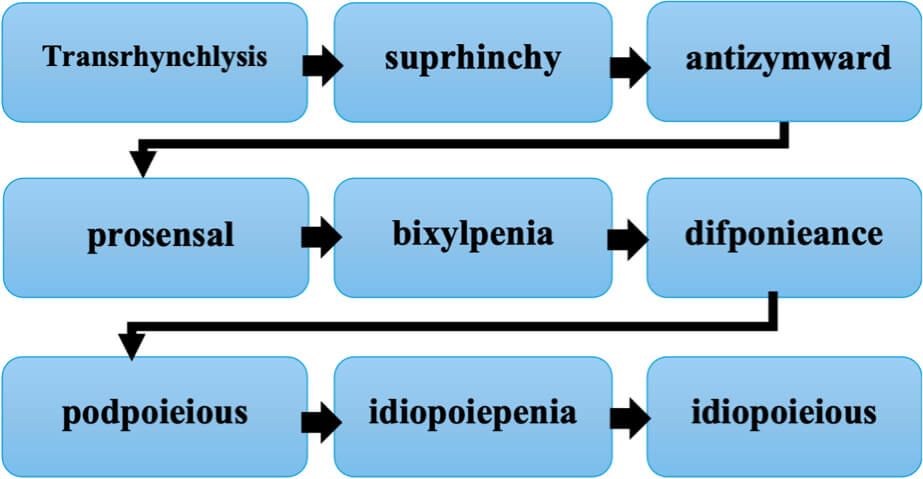

Another example of a compositionally driven generation that can be achieved by implementing heuristic rules is the construction of a set of words that have a variable proportion between vowels and consonants. Below is shown a sequence of words with a decreasing proportion of consonants (m. 39–90 of the score):

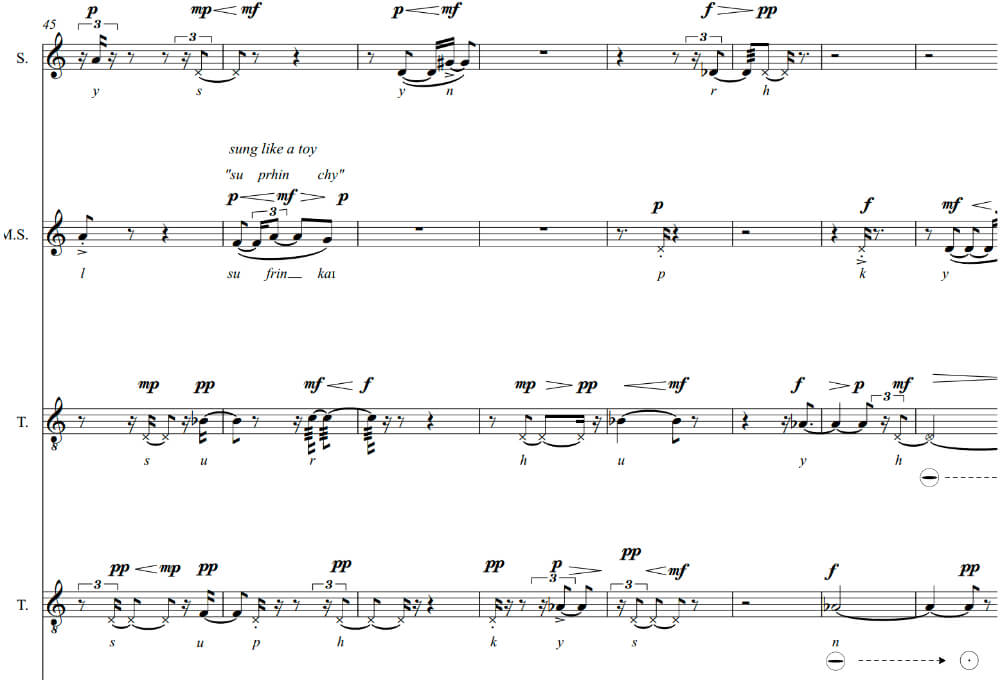

Another functionality of the non-sensical canon module allows me to scatter each word along the vocal texture. As a result, it is possible to hear the word in one of the voices, and splinters of it in the rest of the texture (mm. 45–53 of the score).

The “vowel-choral” module

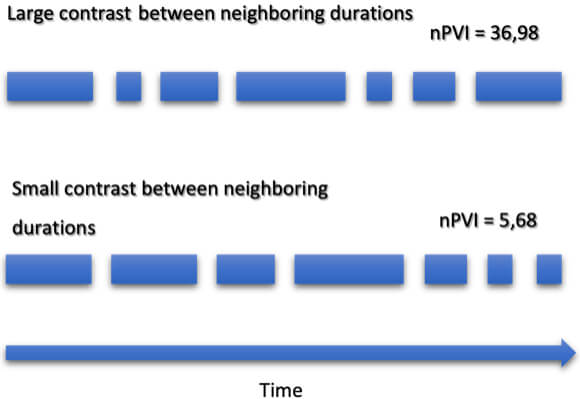

The second module, the “vowel-choral” module, generates a sequence of vocalic sounds, by ordering sequentially a set of predefined vocalic IPA symbols. As the source for the sonification of these vocalic sounds, I use again the database of formants and durations from Hillendbrand’s study. However, I have added another parameter for constraining the generation of sequences, and that is the contrastiveness between successive durations, as measured using the nPVI index (Nolan and Asu 2009; Grabe et al. 2000). In short, the module generates sequences in the form of choral in which each successive vocalic sound is more or less contrastive in terms of duration, and the constraint engine facilitates this by allowing the generation of sequences with a desired nPVI ranging from 5. (less contrasting) to 40. (more contrasting). Some other complementary rules may be activated, such as the possibility of repetition or not of any symbol, and the length of the sequence, which can range from 2 to 12.

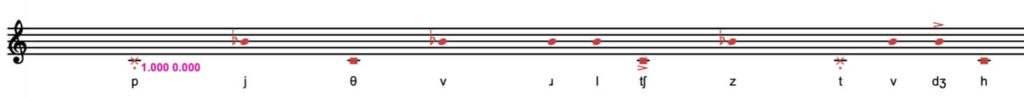

The Consonant Cloud module

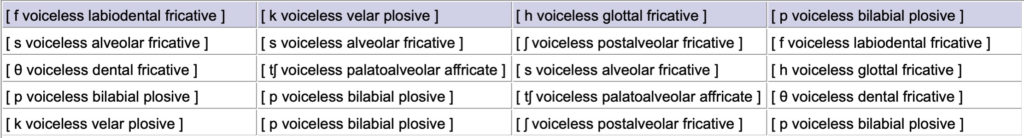

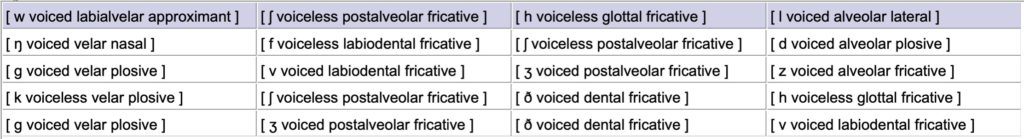

The third module, the “consonant cloud”, generates sequences of consonants. The rules that constrain the generation of these sequences are based on phonetic features. According to the IPA chart[11], consonants can be classified according to (i) phonation – as voiced or unvoiced –; (ii) place of articulation – as bilabial, alveolar, velar, labiodental, dental, postalveolar, palatoalveolar and postalveolar – and (iii) manner of articulation – as plosives, nasals, fricatives, and affricatives. These constraint rules also depend on the number of parallel sequences that should be generated, each of them mapped to a voice of the ensemble. For example, it is possible to constraint a sequence to have four parallel lines containing only unvoiced sounds:

Below is an example of a more complex rule, where independent lines are constrained to share some phonetic quality:

- Voice 1 and 2: equal phonation.

- Voice 3 and 4: equal place articulation.

- Voice 4 and 5: equal manner or articulation

Unvoiced consonants are notated as unpitched sounds. Voiced consonants are notated using pitches coming from the formant structure of a neutral vowel “schwa” (/ə/).

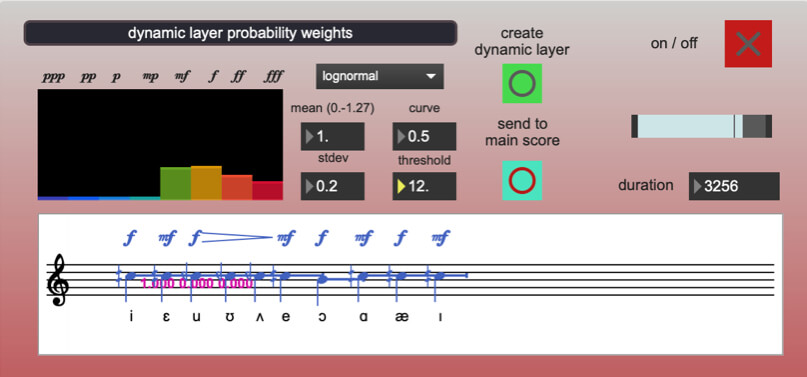

Dynamics submodule

Within each module, there is a dynamics generator submodule, that allows the creation of a dynamic layer for the generated phrase. This layer is conceived in a probabilistic way, namely, the submodule allows the creation of a probability distribution for all possible dynamics within a range (ppp to fff). Once this distribution is established, a dynamic layer can be created for each phrase or section (there is also a “general” dynamics submodule in the main user interface screen).

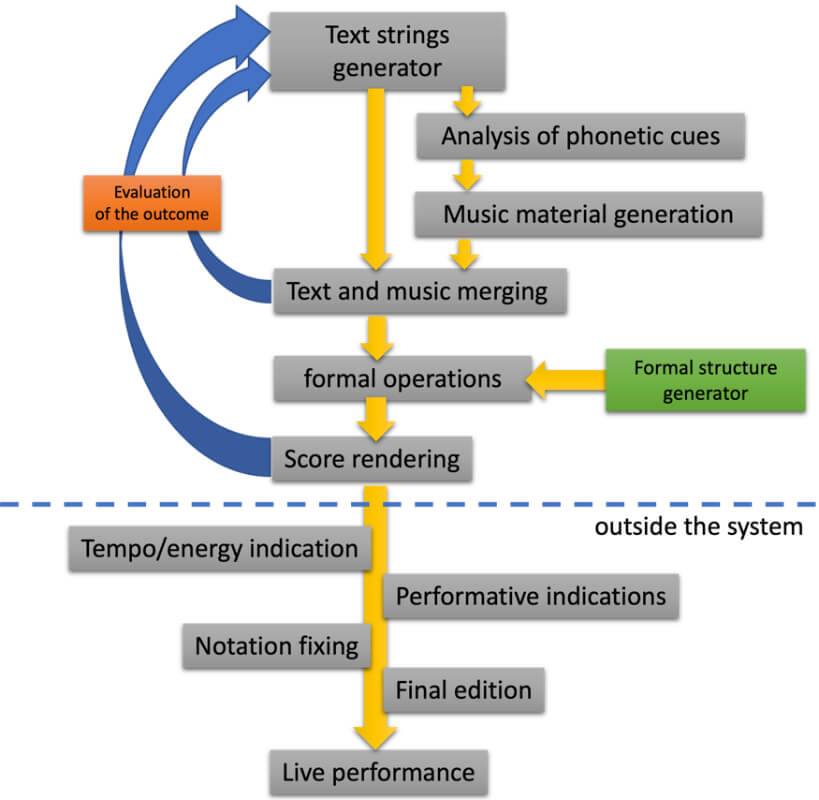

Generation/composition flow

As it can be appreciated, my versificator does not work as a fully automated music score generator but rather proposes an iterative creation process where each generative module produces an output, and if this is non-satisfactory, the composer can modify some input parameters and expect different results. The workflow for the system can be roughly diagramed in the following way:

Formal organization

An independent module facilitates the formal and textural organization of musical material. The process of formal determination is done in advance to the generation of musical material with the modules, and its outcome is a list containing the type of material (words, vowels, and consonants), time-scaling factor, and textural distribution of musical units that should be generated by the modules. This list assumes the role of a formal blueprint and serves as a guide to generate the sentences in the modules, join them, and concatenate them. The determining factors for formal constraints are based on the possible duration and scaling factor of each musical unit, the number of voices in which it can appear, and the type of material and its possibilities of combination in groups of two or three, depending on how many voices of the ensemble they appear on. Within the module, these calculations are done using stochastic methods based on probabilistic distributions and linear progressions. However, I will leave a detailed explanation of those processes out of this text.

Some examples of the rules used to determine the formal organization are:

- Presentations of materials can appear in duets or trios (E.G.: cons-vows; cons-words; vows-cons-words; etc.).

- Duos or trios cannot be composed of the same type of material.

- The sum of voices of each duet or trio of materials cannot exceed the number of voices determined for the vocal ensemble (by default there are five voices).

- Organize the pairs/trios based on the greatest possible contrast between the temporal scaling factors.

- Organize the pairs/trios as close as possible to the mean of the scalar factor. Example: (1 x 10) / 2 = 5.5.

“Outside” of the system

Some compositional decisions are made outside the system. In particular, those related to tempo, use of mouth shapes, whispered/spoken/sprechgesang, and character indication. It means two things for a compositional decision to be made “outside” the system. Initially, the specific musical parameter on which it operates has been left outside of a computational formalization. This might happen for several reasons, but mainly, due to the complexity of the implementation that would entail its computational formalization and compositional operation in this workflow. Second, the fact that most of this work can easily and efficiently be done “by hand” afterward. For example, a ritenuto at the end of a phrase, or the end of a section. At first glance, it seems like a marginal compositional space, but the reader will observe afterward how these actually became a fundamental part of the compositional work. Below, I will discuss some of the non-computational formalizations that happened from my side, “outside the system”.

Tempo

When deciding the tempo of a section, I mainly care about determining a pace that would allow a listener to follow the levels of musical information delivered by the piece at that moment. This varies largely, depending on the type of material presented and the density of the texture as indicated by the formal blueprint. In addition, the choice of faster/slower tempos is sometimes related to the general character of the section, which is indicated with text indications (more information on this later). The addition of rallentandos and accelerandos obeys mainly organic phrasing concerns and formal determination needs, for example, when a section ends, usually a rallentando is desired.

Dynamics

Clearly, a probabilistic methodology for generating a dynamic layer is agnostic of any type of phrase structure, or even more problematic, is independent of how these sound materials should be orchestrated in the texture (e.g., a given ppp dynamic for a group of unvoiced plosives together in the same phrase with a mf vowel choral material will likely cause “orchestration” problems, and these need to be later addressed “by hand”. The dynamic layer generator, as originally ideated, will be most of the time flawed from this orchestrational point of view. Although the result of this was not easy to imagine a priori, once heard live by the ensemble, decisions were to be made. For this, it was also important to have the feedback of the performers. Ultimately, the solution to the problem of dynamics had to happen “outside” of the system. In addition, the chosen set of dynamic possibilities seems now too restrictive, as some important are missing, for example, sforzatos. However, it seems to work relatively better when choosing dynamic distributions to phrases generated by each module according to their material composition, instead of choosing overall dynamic layers for each compound phrase.

Intonation/tunning

The system allows me to choose from different microtonal grids (this is more a feature of the roll/score objects of the Bach library, rather than a feature implemented by me for the versificator). Initially, I chose quarter tones as the default tone division. However, I maintained the flexibility to change the grid to semitones in some sections to facilitate some singing lines that were very complex due to microtonal leaps. I stuck to the original microtonal division in the sections where there are glissandos, as they are easier to sing in tune and the harmonic effects are more interesting.

The (illusion of) defiance

Some other compositional strategies were thought of as ways of breaking the logic of the system. Below I discuss three different cases. The first of them involves using some computational weighted randomness in the form of melodic perturbations, and the others involve no computational processes at all, rather, they come as somewhat arbitrary decisions based on my subjectivity and imaginary sonic representations of certain moments of the piece.

Case 1 – Statistical perturbations

After the first render of the piece, I realized that the sonification of vowels and their resulting chords were too static and repetitive, as the phonological components of the voiced sounds – which ultimately provide the material for the harmonic content – were limited and eventually, the chords started to repeat too often. Thus, in order to give the resulting chords some extent of variation, I decided to add some deviation in how the system would choose the pitches for each harmonic field. As a result, instead of mapping a 1-to-1 pitch for each formant, the system picks weighted random pitches based on a Gaussian probability distribution in which the original pitch is the highest point of the distribution. The width of the distribution (size of the range within pitches can be chosen) increases towards the higher voices. As a result, chords usually vary microtonally/tonally for each vowel.

Case 2 – Mouth shapes

There is a prominent apparition of mouth shape symbols, especially in the first section (m. 1–34). As it can be observed, in this section I only make use of consonant sounds. The idea of the mouth shapes came as a result of listening to a recording of a rehearsal of this section and finding that the overall sonority was quite static and unchanged. I thought that adding a layer of timbral transformation by changing the shape of the mouth while pronouncing the phonemes would add some richness and spontaneity to the texture, which to my judgment was absent. The addition of mouth shapes was done fully “outside of the system”.

Case 3 – Character indications

The sung character indications came to my mind following a vague (imaginary) semantic connection between the text in each section and an idea about what that text might mean in this imaginary language, and subsequently how it should be uttered. For some reason, the word “transchynklisys” (m. 39) sounded to me somewhat mysterious or metaphysical, the word “suphrinchy” (m. 46) more like a childish game, therefore it is asked to be sung as a toy, whereas the word “difponieance” (m. 69) sounded more solemn, or the words “homovirish abominish” (m. 135) sounded more religious, therefore I wanted them to be sung in a Palestrina-like style. Another example, starting on m. 91 comes the first vowel choral, and to me, this section for some reason gave me some psychedelic vibes, as a type of introspective trip under the effects of some psychoactive substance, where one can hear how words slow down and stretch. Therefore, the indication in the score states that this section should sound psychedelic and retro (60s-ish).

In summary, the character indications are rather unspecific, but they come as comments on my personal idea of how the overall sonority of the section should be. Sometimes, character indications involve a sense of theatricality (e.g. “as from radio news”, in m. 130). In some others, the singing technique changes dramatically, such as in m. 147 and 150. In every case, the result of their interpretation is almost unforeseen and open to what each performer understands from them.

Final reflections

After listening to the recording of the premiere of the piece several times, I found some nice-sounding moments, for example, the end of the section on page 13 of the score with the glottal trill – added outside the system – (bars 84–94), the intervention of the mezzo-soprano in bar 130 (“as from radio news”), or the very end of the piece. The material is in general very homogenous: the text based on phonetic rules gives it uniformity in its sonority, and the rhyming pseudowords give it a poetic-imaginary quality that has a certain charm. However, I must say that the interpretation by the performers of the character indications and those elements composed “outside of the system” is what gives the piece something, that otherwise would not have. As it can be observed, the overall compositional trajectory of the piece goes from almost no character indications to where the irrationality of the indications floods the whole texture around the climatic point, sometime around m. 150.

After this experience, I came to the conclusion that the work with automation and computational formalizations – at least as they were implemented in the versificator – seemed to me more like a starting point for a deeper compositional elucubration, which ultimately was oriented towards the addition of new layers and the addition of extra-musical ideas that were spilled into the piece, in particular, layers involving theatricality or more visual-gestural performing aspects. Even after a significant process of notation refinement and engraving, the piece as it emerges originally from the versificator seems to be far from finished. For it to be a complete piece, it is still necessary to generate and refine new layers of compositional development outside of the formalizations that the versificator operates. In this sense, the process of composition “outside of the system” became the key to making a valuable piece: a type of marginal – and why not liminal – compositional space at the borders of the system became the heart of the work. Without this, the piece would be plainly not worth of performance in concert.

What has become clear to me is that the piece’s artistic individuality lies far beyond computational formalizations. This realization prompts several questions: Is the purpose of automated composition merely to demonstrate a concept, or does it hold real artistic value? How many times must the versificator repeat its process to produce the finest result? Could be the case thus, that computational formalizations might work just as musical “tipping points” to further explore? Is this lack of humanity in the outcome of the versificator what Orwell imagined as the alienating nature of the versificator? I leave it to the composition itself to manifest these inquiries and, in its own way, address some of them.

Link to the video of the premiere of the piece “Versificator – Render 3” by the Bergen-based vocal ensemble “Tabula Rasa”: https://www.youtube.com/watch?v=MGxBEbUMMt4&ab_channel=JuanVassallo

References

Grabe, Esther, Francis Nolan, and Low Ling. 2000. “Quantitative Characterizations of Speech Rhythm: Syllable-Timing in Singapore English.” Language and Speech 43 (4): 377–401.

Hillenbrand, James, Laura A. Getty, Michael J. Clark, and Kimberlee Wheeler. 1995. “Acoustic characteristics of American English vowels.” Journal of the Acoustical Society of America 97 (5): 3099–3111. doi.org/10.1121/1.411872.

Huxley, Aldous. 2013. Brave New World. London: Everyman’s Library.

Klatt, Dennis H. 1980. “Software for a cascade/parallel formant synthesizer.” The Journal of the Acoustical Society of America 67 (3): 971–995. doi.org/10.1121/1.383940.

Laurson, M. 1996. PatchWork: A Visual Programming Language and Some Musical Applications. Sibelius Academy.

Nolan, Francis, and Eva Liina Asu. 2009. “The pairwise variability index and coexisting rhythms in language.” Phonetica 66 (1–2): 64–77. doi.org/10.1159/000208931.

Orwell, George. 2023. 1984. Biblios.

Sandred, Örjan. 2017. The musical fundamentals of computer assisted composition. Winnipeg MB: Audiospective Media.

Sandred, Örjan. 2010. “PWMC, a Constraint-Solving System for Generating Music Scores.” Source: Computer Music Journal 34 (2): 8–24. https://about.jstor.org/terms.

Sandred, Örjan. 2009. “Approaches to Using Rules as a Composition Method.” Contemporary Music Review 28 (2): 149–165. doi.org/10.1080/07494460903322430.

Contributor

Juan Vassallo

Juan Vassallo (BMus, MA) is an Argentinian composer, pianist and media artist. Currently he is pursuing his PhD in Artistic Research at the University of Bergen (Norway). His music has been premiered internationally and awarded in competitions in France, China and Argentina. Currently integrates Azul 514, an experimental musical project based on the interaction between digital sound synthesis, instrumental improvisation and real-time processing of sound.